Abstract

Domain adaptive Object Detection (DAOD) leverages a labeled domain (source) to learn an object detector generalizing to a novel domain without annotation (target). Recent advances use a teacher-student framework, i.e., a student model is supervised by the pseudo labels from a teacher model. Though great success, they suffer from the limited number of pseudo boxes with incorrect predictions caused by the domain shift, misleading the student model to get sub-optimal results. To mitigate this problem, we propose Masked Retraining Teacher-student framework (MRT) which leverages masked autoencoder and selective retraining mechanism on detection transformer. Specifically, we present a customized design of masked autoencoder branch, masking the multi-scale feature maps of target images and reconstructing features by the encoder of the student model and an auxiliary decoder. This helps the student model capture target domain characteristics and become a more data-efficient learner to gain knowledge from the limited number of pseudo boxes. Furthermore, we adopt selective retraining mechanism, periodically re-initializing certain parts of the student parameters with masked autoencoder refined weights to allow the model to jump out of the local optimum biased to the incorrect pseudo labels. Experimental results on three DAOD benchmarks demonstrate the effectiveness of our method. Code can be found at MRT Codebase.

Problem Definition

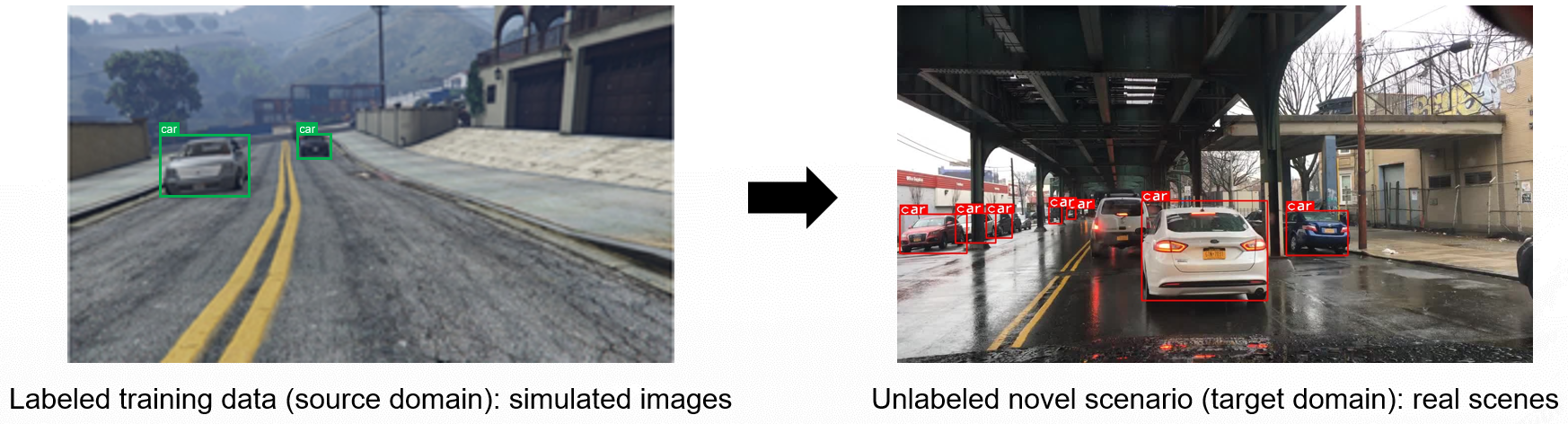

In real-world applications, there exists a distribution gap between training data (source domain) and the deployed environment (target domain). For example, the model trained on sunny weather may face a significant performance drop when applied to foggy weather scenes. Unsupervised Domain Adaptive Object Detection aims to generalize the model to target domain without additional annotations.

Method Overview

Overview Masked Retraining Teacher-student framework(MRT). The adaptive teacher-student baseline consists of a teacher model which takes weakly-augmented target images and produces pseudo labels, and a student model which takes strongly augmented source and target images, supervised by ground truth labels and pseudo labels respectively. Adversarial alignment are applied on backbone, encoder and decoder. Our proposed MAE branch masks feature maps of target images, and and reconstructs the feature by student encoder and an auxiliary decoder. Our proposed selective retraining mechanism periodically re-initialize certain parts of the student parameters as highlighted. The teacher model is updated only by EMA from the student model. Empirically, we use the teacher model at inference time.

Experimental Results

Our method achieves state-of-the-art performance on three benchmarks: cityscapes to foggy cityscapes(0.02) (city2foggy), sim10k to cityscapes(car) (sim2city), and cityscapes to bdd100k(daytime) (city2bdd).

Visulization of detection results demostrate the effectiveness of each module of our method.

Introduction Video

Citation

If you use MRT in your research or wish to refer to the results published in the paper, please use the following BibTeX entry.

@inproceedings{zhao2023masked,

title={Masked Retraining Teacher-Student Framework for Domain Adaptive Object Detection},

author={Zhao, Zijing and Wei, Sitong and Chen, Qingchao and Li, Dehui and Yang, Yifan and Peng, Yuxin and Liu, Yang},

booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV)},

pages={19039--19049},

year={2023}

}